About CML

Motivation

The human brain is an extraordinarily dynamic system that robustly integrates vast amounts of information from different, noisy sensory channels. From this immense quantity of raw data, the brain forms a unified and coherent view of its world. Even the very best systems in Artificial Intelligence (AI) and robotics have taken only tiny steps in this direction. Building a system that composes a global perspective from multiple distinct sources, types of data, and sensory modalities remains a grand challenge of AI. Yet the challenge is specific enough to be studied rigorously and in detail, which makes deep insights into these mechanisms a plausible prospect in the near future.

Crossmodal Learning

The term crossmodal learning refers to the adaptive, synergistic synthesis of complex perceptions from multiple sensory modalities, such that learning within any individual sensory modality can be enhanced with information from one or more other modalities. Crossmodal learning is crucial for human understanding of the world, and examples are ubiquitous: learning to grasp and manipulate objects, learning to walk, learning to read and write, and learning to understand language and its referents. In all these examples, visual, auditory, somatosensory or other modalities have to be integrated, so the learning must be crossmodal. In fact, a broad range of acquired human skills are crossmodal, and many of the most advanced human capabilities, such as those involved in social cognition, require learning from the richest combinations of crossmodal information.

CML Transregional Collaborative Research Centre

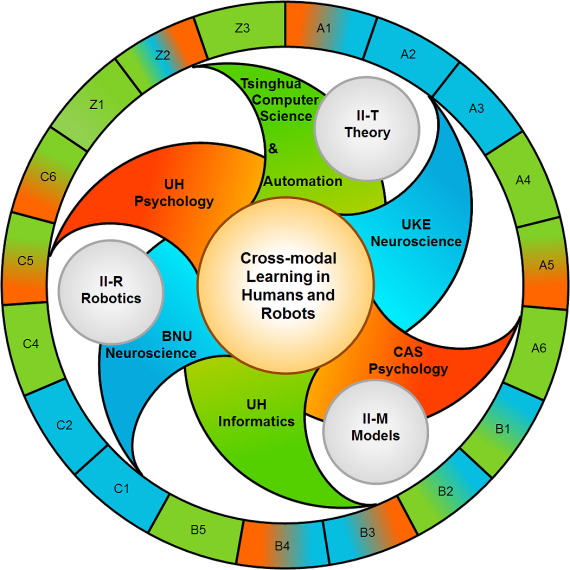

During the first phase of the interdisciplinary Transregional Collaborative Research Centre (TRR 169), jointly funded by the DFG (Deutsche Forschungsgemeinschaft) and the NSFC (National Natural Science Foundation of China), crossmodal learning has been established as a new research field. Before then, crossmodal learning was a broad, multidisciplinary topic that had not yet coalesced into a single, unified field. Instead, there were many separate efforts, each tackling crossmodal learning from its own perspective, with little overlap. To accelerate the convergence of these areas, the research programme of the first phase of TRR 169 took a highly interdisciplinary approach, integrating groups from neuroscience, psychology and computer science. The programme was organized into three main areas (adaptivity, prediction and interaction) and worked towards six main objectives. Each subproject studied the integration of at least two different sensory modalities (e.g. vision and audition). True to the core idea of a Transregio, each subproject was jointly led by PIs from Hamburg and Beijing, encouraging the exchange of ideas across countries and cultures.

The first phase of TRR 169 was dedicated to developing theory and models of crossmodal learning; in the second phase, we will move from theory to models and from models to systems. We will build on the established collaboration between the partners at Universität Hamburg, the University Medical Center Hamburg-Eppendorf, three leading Chinese universities (Tsinghua University, Peking University and Beijing Normal University) and the Institute of Psychology of the Chinese Academy of Sciences to advance the understanding, modelling and implementation of crossmodal systems. This is the crucial middle stage towards our overall long-term goal of understanding and unifying the neural, cognitive and computational mechanisms of crossmodal learning.